Deep MIB - Train Tab


Settings for generating and training deep convolutional networks in Microscopy Image Browser.

Overview

The Train tab in Deep MIB contains settings for designing and training deep convolutional networks. Before starting, adjust the default settings to your project’s needs and ensure the output network file is specified using the button in the Network panel.

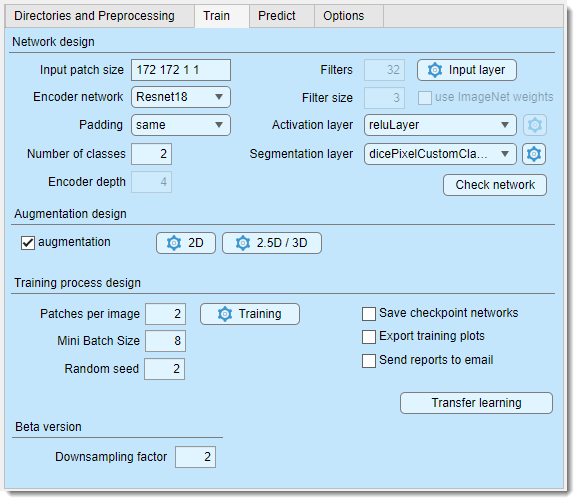

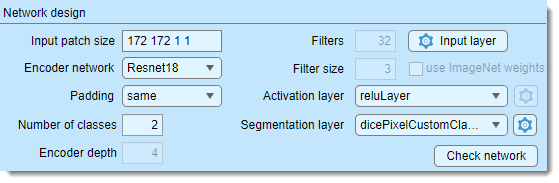

Network design

The Network design section configures the network architecture.

- defines the dimensions of image blocks (height, width, depth, colors) for training (e.g., "572 572 1 2" for a 572x572x1 patch with 2 color channels). Choose the input patch size based on available GPU memory, desired field of view, dataset size, and number of color channels. Patches are randomly sampled; the number of patches is set in the Training process design section

- selects the encoder for supported architectures, sorted from lightweight to more complex

- sets convolution padding type:

- same: adds zero padding to keep input/output sizes equal

- valid: no padding, reducing output size but minimizing edge artifacts (though same with overlap prediction also reduces artifacts).

Info

Run the network check to verify compatibility of the input patch size with the selected padding method

- specifies the total number of materials, including Exterior

- sets the number of encoding/decoding layers in U-Net, controlling downsampling/upsampling by 2^D. Tweak with (Beta version) to adjust patch size

- defines the number of output channels (filters) in the first encoder stage, doubling per subsequent stage, mirrored in the decoder

- sets convolutional filter size (e.g., 3, 5, 7)

- configures input image normalization settings

- (MATLAB version only) initializes 2D patch-wise networks with ImageNet pretrained weights; requires the corresponding support packages to be installed

- selects the activation layer type, with additional settings via the button when available

List of available activation layers:

- reluLayer: Rectified Linear Unit (ReLU) layer, default activation layer

- leakyReluLayer: Leaky Rectified Linear Unit (ReLU) layer, multiplies negative inputs by a fixed scale factor

- clippedReluLayer: Clipped Rectified Linear Unit (ReLU) layer, performs a threshold operation where any input below zero is set to zero and any input above the clipping ceiling is set to that ceiling

- eluLayer: Exponential Linear Unit (ELU) layer, applies an exponential nonlinearity to negative inputs

- swishLayer: Swish activation layer applies f(x) = x / (1+e^(-x))

- tanhLayer: Hyperbolic tangent (tanh) layer
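To make the differences between these activation layers concrete, the sketch below evaluates each function on a few scalar inputs in plain Python. It is only an illustration of the underlying math, not Deep MIB or MATLAB code; the parameter values (`scale`, `ceiling`, `alpha`) are assumptions chosen for the example.

```python
import math

# Scalar versions of the activation functions listed above.
# Parameter values are illustrative assumptions, not Deep MIB settings.

def relu(x):
    return max(0.0, x)

def leaky_relu(x, scale=0.01):
    # Negative inputs are multiplied by a small fixed scale factor.
    return x if x >= 0 else scale * x

def clipped_relu(x, ceiling=10.0):
    # Values below zero become zero, values above the ceiling become the ceiling.
    return min(max(0.0, x), ceiling)

def elu(x, alpha=1.0):
    # Exponential nonlinearity for negative inputs.
    return x if x >= 0 else alpha * (math.exp(x) - 1.0)

def swish(x):
    # f(x) = x / (1 + e^(-x))
    return x / (1.0 + math.exp(-x))

def tanh(x):
    return math.tanh(x)

if __name__ == "__main__":
    functions = [relu, leaky_relu, clipped_relu, elu, swish, tanh]
    for x in (-2.0, 0.0, 2.0, 15.0):
        row = ", ".join(f"{f.__name__}={f(x):.4f}" for f in functions)
        print(f"x={x:5.1f}: {row}")
```

The functions differ mainly in how they treat negative inputs and, for the clipped variant, very large inputs.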

- selects the output layer, with settings via the button when available

List of available segmentation layers:

- pixelClassificationLayer: cross-entropy loss

- focalLossLayer: focal loss for class imbalance

- dicePixelClassificationLayer: generalized Dice loss for class imbalance

- dicePixelCustomClassificationLayer: modified Dice loss for rare classes
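As a rough illustration of why the loss choice matters for class imbalance, the following Python sketch compares cross-entropy, focal loss, and a generalized Dice loss on toy values. The formulas follow the commonly published definitions; the `gamma` and `alpha` values and the inverse-squared-area class weights are assumptions for this example and may not match the exact implementation used by Deep MIB.

```python
import math

def cross_entropy(p_true):
    # Loss for one pixel, given the predicted probability of its true class.
    return -math.log(p_true)

def focal_loss(p_true, gamma=2.0, alpha=0.25):
    # Down-weights pixels that are already classified confidently.
    return -alpha * (1.0 - p_true) ** gamma * math.log(p_true)

def generalized_dice_loss(pred, target):
    # pred, target: one list of per-pixel values for each class.
    # Each class is weighted by the inverse of its squared area (assumption).
    numerator = 0.0
    denominator = 0.0
    for y, t in zip(pred, target):
        area = sum(t)
        weight = 1.0 / (area * area) if area > 0 else 0.0
        numerator += weight * sum(yi * ti for yi, ti in zip(y, t))
        denominator += weight * sum(yi * yi + ti * ti for yi, ti in zip(y, t))
    return 1.0 - 2.0 * numerator / denominator

if __name__ == "__main__":
    for p in (0.9, 0.5, 0.1):
        print(f"p={p}: CE={cross_entropy(p):.4f}, focal={focal_loss(p):.4f}")
    # Two classes, four pixels; the second class is rare (one pixel).
    target = [[1, 1, 1, 0], [0, 0, 0, 1]]
    pred = [[0.9, 0.8, 0.9, 0.4], [0.1, 0.2, 0.1, 0.6]]
    print(f"generalized Dice loss: {generalized_dice_loss(pred, target):.4f}")
```

Relative to cross-entropy, focal loss shrinks much more for an easy pixel (p = 0.9) than for a hard one (p = 0.1), which is what shifts the training signal toward difficult, often rare, classes.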

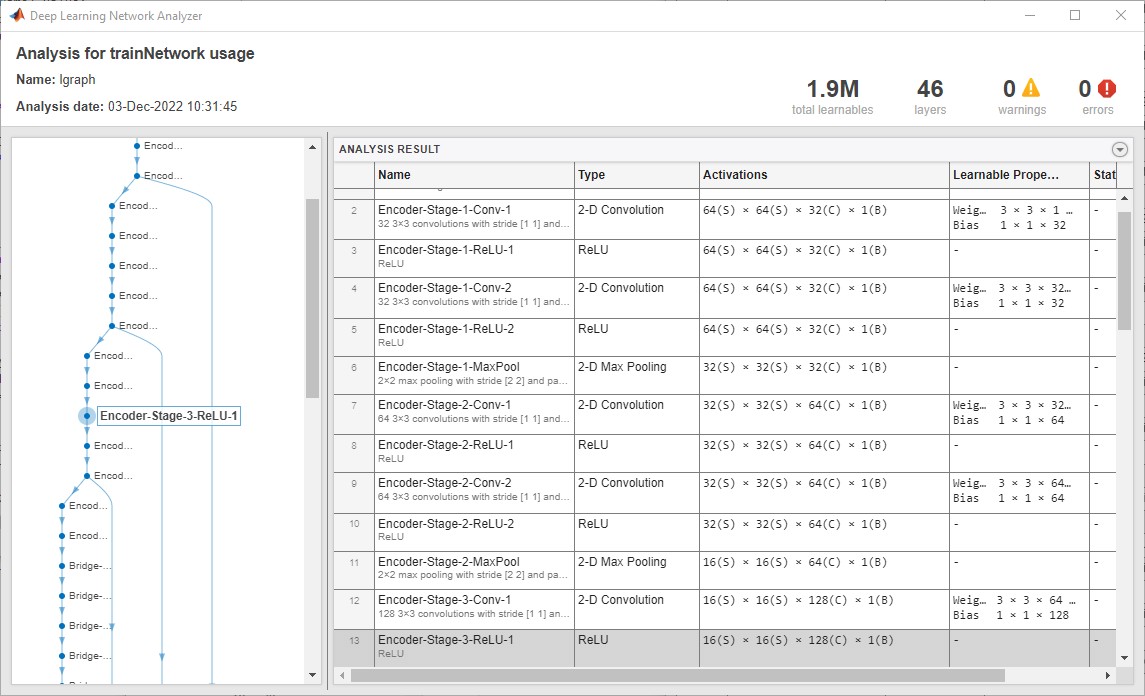

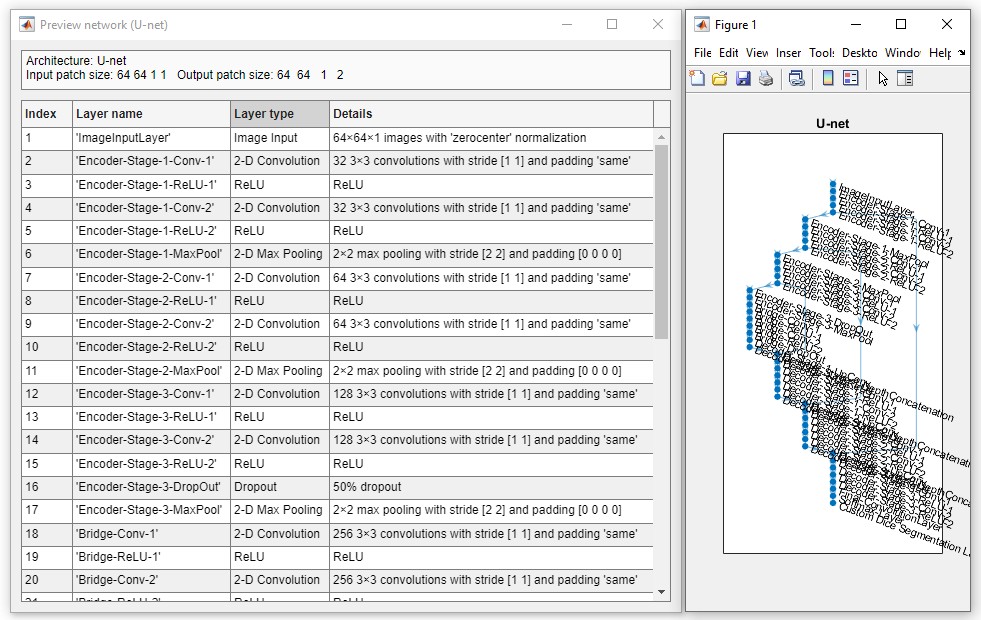

- previews and validates the network (limited info in standalone MIB)

Snapshots of the network check window for the MATLAB and standalone versions of MIB

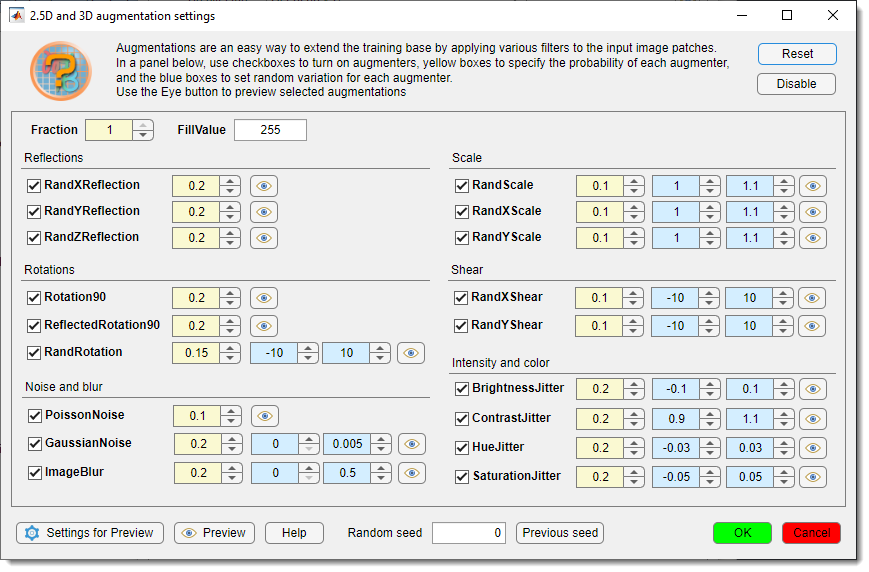
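The interplay between input patch size, padding type, encoder depth, and number of first-stage filters can be checked by hand before running the network check. The Python sketch below is a simplified model that assumes a classic U-Net layout (two convolutions per stage, 2x2 pooling, one bridge stage); the function names are hypothetical and the actual architectures in Deep MIB may differ.

```python
def encoder_filters(first_stage_filters, depth):
    # Filters double at each subsequent encoder stage.
    return [first_stage_filters * 2 ** i for i in range(depth)]

def same_padding_ok(size, depth):
    # With "same" padding the size is halved `depth` times,
    # so it must be divisible by 2^depth.
    return size % 2 ** depth == 0

def valid_unet_output_size(size, depth, filter_size=3, convs_per_stage=2):
    # With "valid" padding each convolution removes (filter_size - 1) pixels.
    # Returns None when the patch size does not fit the architecture.
    shrink = convs_per_stage * (filter_size - 1)
    for _ in range(depth):            # encoder stages followed by pooling
        size -= shrink
        if size <= 0 or size % 2:
            return None
        size //= 2
    size -= shrink                    # bridge stage
    if size <= 0:
        return None
    for _ in range(depth):            # decoder: upsample, then convolve
        size = size * 2 - shrink
    return size if size > 0 else None

if __name__ == "__main__":
    print(encoder_filters(32, 4))
    print(same_padding_ok(512, 4), same_padding_ok(572, 4))
    print(valid_unet_output_size(572, 4))
```

Under these assumptions a 572-pixel patch with depth 4 and 3x3 filters is a valid-padding size (the output shrinks to 388 pixels), but it is not divisible by 16 and so would not suit same padding at that depth.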

Augmentation design

Augmentation enhances training data with image processing filters (17 for 2D, 18 for 3D networks), configurable via the buttons in this section.

- enables augmentation of input patches

- sets augmentation for 2D networks (17 operations)

- sets augmentation for 3D networks (18 operations)

Specify the fraction of patches to augment, plus probability and variation per filter. Multiple filters may apply to a patch based on probability.
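The two-level selection described above (first decide whether a patch is augmented at all, then decide per filter) can be sketched in a few lines of Python. This is a hypothetical model of the logic, not Deep MIB's implementation; the filter names and probabilities are made up for the example.

```python
import random

def pick_augmentations(filters, fraction, rng):
    """Return the names of the filters to apply to one patch.

    filters  : dict mapping a filter name to its probability (0..1)
    fraction : fraction of patches that are augmented at all (0..1)
    rng      : random.Random instance, so results can be reproduced
    """
    if rng.random() >= fraction:
        return []                       # this patch is left untouched
    # Each filter is drawn independently, so several may apply at once.
    return [name for name, p in filters.items() if rng.random() < p]

if __name__ == "__main__":
    # Hypothetical filter names and probabilities.
    filters = {"flip": 0.5, "rotate": 0.3, "blur": 0.1}
    rng = random.Random(42)
    for patch_id in range(5):
        print(patch_id, pick_augmentations(filters, fraction=0.9, rng=rng))
```

Because each filter is drawn independently, a single patch may receive none, one, or several augmentations.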

2D/3D augmentation settings

Press the button for 2D or 3D augmentation to open the settings dialog:

- Toggle augmentations with checkboxes

- Set the probability (yellow) and variation (light blue) of each augmentation

- restores defaults

- turns off all augmentations

- probability of patch augmentation (1 = all, 0.5 = 50%)

- background color for downsampling/rotation (0 = black, 255 = white for 8-bit)

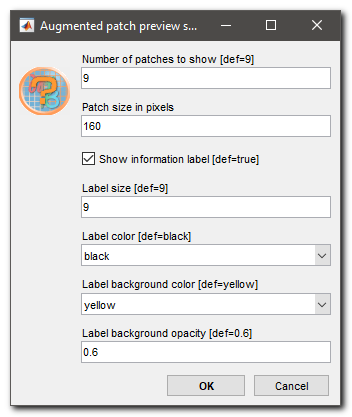

- previews patches with augmentations, fixed or random based on the Random seed (0 = random)

- adjusts preview parameters

- links to training help

- restores the last random seed (when Random seed = 0)

- applies settings

- discards changes

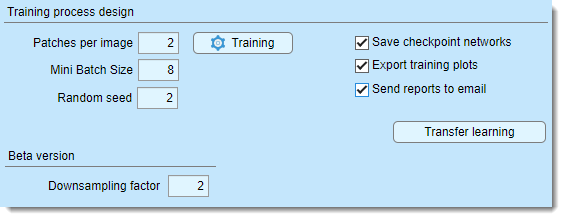

Training process design

The Training process design section configures the training process; starting it is described in Start the training process.

- sets the number of patches per image/dataset per epoch. Use 1 patch with many epochs and Shuffling: every-epoch (set in the training options) for best results, or adjust as needed

- number of patches processed simultaneously, limited by GPU memory. Loss is averaged across the batch

- seeds the random number generator for training initialization (use any fixed value except 0 for reproducibility, otherwise use 0 for random initialization at each training attempt)

- sets multiple parameters (see trainingOptions).

Tip

Set Plots to "none" for up to 25% faster training

- saves checkpoints after each epoch to 3_Results/ScoreNetwork. Resume training from checkpoints via a dialog. In R2022a or newer, the frequency for saving checkpoints can be adjusted

- saves accuracy/loss scores to 3_Results/ScoreNetwork in .score (MATLAB) and CSV formats, using the network filename

- emails progress/finish updates. Configure SMTP settings via the checkbox
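To estimate how long a run will be, it helps to relate the number of images, patches per image, and batch size to the iteration count. The Python sketch below does that arithmetic and also mirrors the seed convention (0 = random each time). It assumes that an incomplete final mini-batch is discarded each epoch; the helper names are hypothetical.

```python
import random

def iterations_per_epoch(num_images, patches_per_image, batch_size):
    # Assumption: patches that do not fill a complete mini-batch are dropped.
    return (num_images * patches_per_image) // batch_size

def total_iterations(num_images, patches_per_image, batch_size, epochs):
    return iterations_per_epoch(num_images, patches_per_image, batch_size) * epochs

def make_rng(seed):
    # Seed 0 means "different initialization on every attempt";
    # any other value gives a reproducible sequence.
    return random.Random(None if seed == 0 else seed)

if __name__ == "__main__":
    # 100 images, 1 patch per image, mini-batches of 8 patches, 50 epochs
    print(iterations_per_epoch(100, 1, 8))
    print(total_iterations(100, 1, 8, 50))
    print(make_rng(5).random() == make_rng(5).random())
```

With one patch per image, increasing the number of epochs is what increases the number of distinct random patches the network sees.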

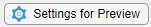

Configuration of email notifications


- recipient address

- SMTP server address

- server port

- enables authentication

- enables TLS/SSL

- server username (e.g., Brevo email)

- server password (hidden; toggle "Check to see the password in plain text after OK press" to view)

- emails on completion

- emails progress (custom training dialog only, frequency tied to checkpoints)

- tests the settings once they have been saved

Important!

Use dedicated SMTP services (e.g., Brevo) instead of personal email accounts.
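If the notifications do not arrive, it can help to test the same SMTP credentials outside Deep MIB. The Python sketch below mirrors the fields of the dialog using only the standard library; it is not how Deep MIB sends email, and the server name, addresses, and default port are placeholder assumptions to be replaced with the values from your SMTP provider.

```python
import smtplib
from dataclasses import dataclass
from email.message import EmailMessage

@dataclass
class SmtpSettings:
    # Fields mirror the dialog; all values used below are placeholders.
    recipient: str
    server: str
    port: int = 587          # common port for STARTTLS submission (assumption)
    use_auth: bool = True
    use_tls: bool = True
    username: str = ""
    password: str = ""

def build_message(settings, subject, body):
    msg = EmailMessage()
    msg["From"] = settings.username
    msg["To"] = settings.recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg

def send(settings, msg):
    # Opens a real network connection; call only with valid credentials.
    with smtplib.SMTP(settings.server, settings.port, timeout=30) as smtp:
        if settings.use_tls:
            smtp.starttls()
        if settings.use_auth:
            smtp.login(settings.username, settings.password)
        smtp.send_message(msg)

if __name__ == "__main__":
    settings = SmtpSettings(
        recipient="user@example.com",
        server="smtp.example.com",
        username="sender@example.com",
        password="smtp-key-goes-here",
    )
    message = build_message(settings, "Training finished", "The network was saved.")
    print(message)            # inspect the message; send(settings, message) to deliver
```

The example only builds and prints the message; `send` is defined but not called, so nothing is transmitted until real settings are supplied.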

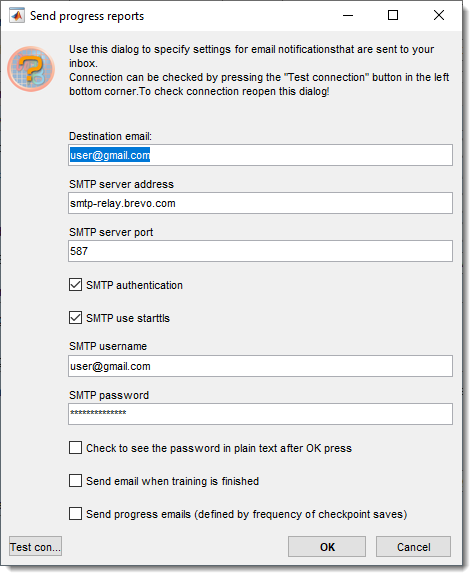

Configuration of brevo.com SMTP server

- Sign up at Brevo

- Access SMTP and API from the top-right menu:

- Click

- Copy the key to the password field in email settings

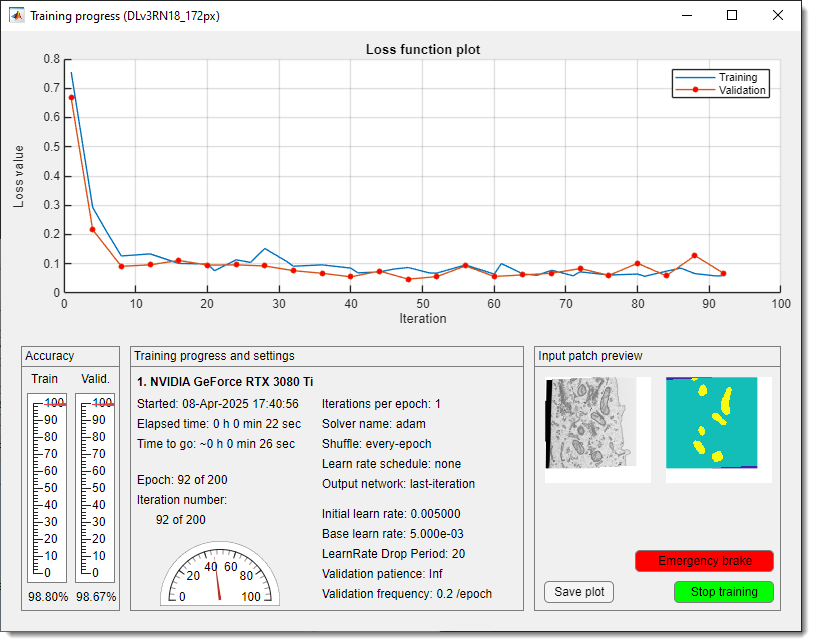

Start the training process

Click the training start button to begin. If a network file already exists at the location specified in the Network panel, a dialog offers to resume training.

A .mibCfg config file is saved in the same directory, loadable via Options tab → Config files → Load.

During training, a loss function plot appears (blue = training, red = validation), with accuracy gauges at the bottom left.


Training can be stopped with either of two buttons; the second is faster but may not finalize networks with batch normalization.

By default, Deep MIB uses a custom progress plot. To use MATLAB’s default training plot instead (MATLAB version only), uncheck Options tab → Custom training plot → Custom training progress window.

Disable plots for speed via Train tab → Training → Plots → none.

Preview patches (bottom right) reduce performance; adjust their frequency in Options tab → Custom training plot → Preview image patches and Fraction of images for preview (1 = all, 0.01 = 1%).

Custom DeepMIB training loss plot

After training, the network and config files are saved to the location specified in the Network panel.
